The Library of Pulau Saigon, Now at 2219: Futures Imagined (ArtScience Museum)

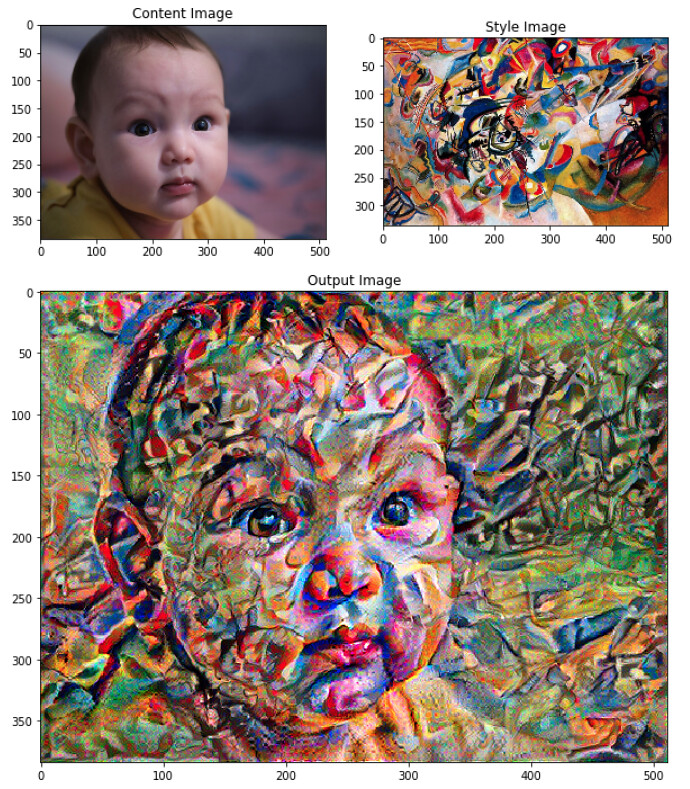

Over the years I've often told a story about an apocryphal encounter I had with a certain glass case full of items from Pulau Saigon at the ArtScience Museum, back in 2011...

Back then, as a designer, I had been working on some interactive educational games for the education team at ArtScience Museum, and I also had the opportunity to show my own interactive artwork about the Singapore River. It was in a large cavernous space at the end of the huge Titanic show - a section about Singapore during the time of the Titanic. I was delighted to be able to show a work about the Singapore River next to some actual artefacts dug up from the Singapore River (loaned by Prof John Miksic). At the time I knew very little of the history of the islet - except that, well... not very much was known about it, and that it was plainly visible in some portions of my interactive (which had been based on old maps of the Singapore River).

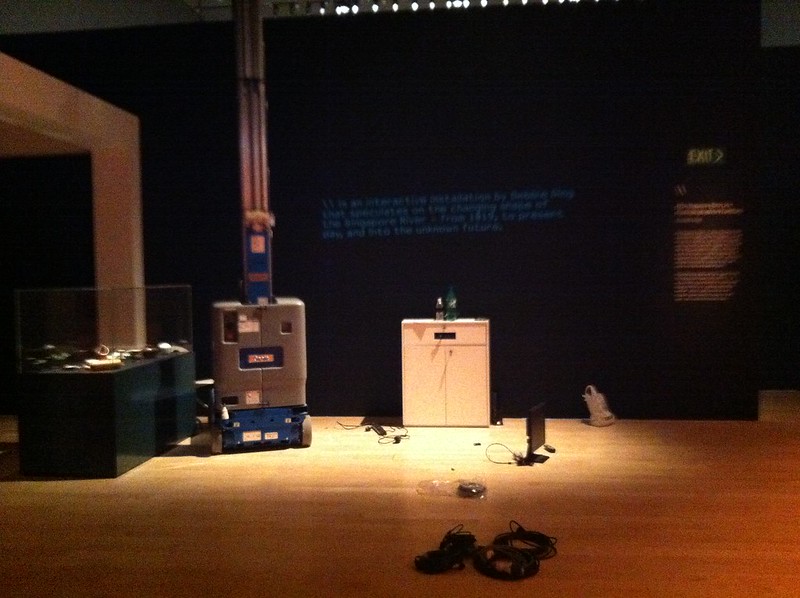

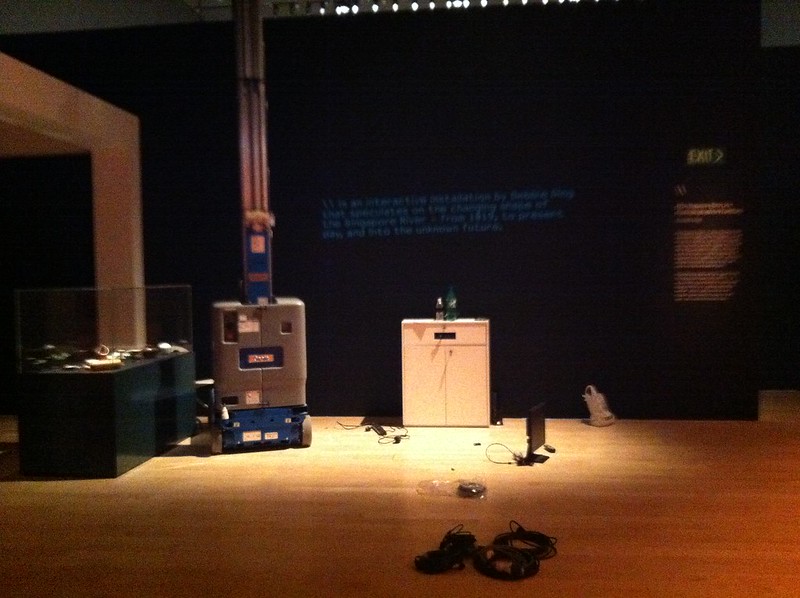

I don't really know what I should have expected, but the items were much tinier than I had imagined them when Angeline first told me about them. I recall feeling somewhat underwhelmed by their scale; they were entirely dwarfed by the space. I remember being somewhat confused by the label, and even though they were not my things, I began to feel worried that people would not understand them, or want to understand them. Audiences today have so much media fighting for their attention - they want to be entertained by easily consumable chunks of entertainment; right before this there was the spectacle of the TITANIC! TITANIC! READ ALL ABOUT IT! Could we really get audiences to spend time and energy contemplating this poky little vitrine full of tiny, rough, broken, complex things which might take more time to understand?

Anyway, I thought about how I used to obsessively photograph everything even back then. So why had I never searched in my own archives for photos of this purported vitrine that I saw in 2011? So I went back into my photo archives and... successfully dug up these photos!

BACK IN 2011: NOTE THE GLASS CASE ON THE LEFT OF THIS IMAGE!!!

BACK IN 2011: Pulau Saigon Artefacts at the ArtScience Museum

Part of my desire to make "The Library of Pulau Saigon" stemmed from that encounter with that problematic vitrine. So it feels quite fitting that a copy of this work is finally making an appearance at ArtScience Museum - in the new "2219: Futures Imagined" exhibition.

In terms of how the work is made, I've always been surprised by how far hand-waving gets you. The truth of the matter is that the models are made by sampling Google Images and finding individual (and sometimes different) methods to reproduce those objects in 3D, by writing OpenSCAD scripts to generate the models. Some were straightforward, like just producing SVG outlines of objects and transforming them into 3D, but others involved more... er.... creative coding. As an artist I might like to say that it's the machine helping me along in the creative craftsmanship of the object, but actually I'm in the back hitting the computer with a big stick shouting "COMPILE, DAMMIT, JUST COMPILE MY CRAPPY CODE!"

This time around I decided I also wanted to generate lots of gifs showing the process, to supplement the existing physical work which I got onemakergroup to help me reprint. Why didn't I do this earlier? It seems people are always drawn to the screenshots of my OpenSCAD files for this, although frankly speaking, if you are a techie person you will quickly see that a LOT of intervention has gone into the making of the objects (whilst I'm cheeky enough to say that it's an unforgetting machine that is making them, to a great extent the hand and the subjectivity of Debbie the artist is obviously written all over the objects)...

THE GIF FACTORY

Since I did my project in 2015, OpenSCAD has gotten many more features, including an "animate" feature - except that what it does is render out the animation frame by frame, and you still have to compile everything together by yourself, so in the interests of time this wasn't the method I wanted to use. (But if you did want to use OpenSCAD to generate frames that you could compile into an animation, you can look at the default example within OpenSCAD. You just have to use the special variable $t somewhere in your model, and then to start the animation, select View > Animate and enter some values into "FPS" and "Steps".)
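As a rough sketch of that frame-by-frame route (not the workflow used for this project): OpenSCAD steps $t from 0 up to just under 1, in increments of 1/Steps. The Python below lists those $t values and builds, without running, one command line per frame. It assumes an `openscad` binary on your PATH that accepts `-o`, `-D` and `--imgsize`; `model.scad` and the `frame00000.png` naming are placeholders for illustration.

```python
def frame_times(steps):
    """Return the $t value for each frame: 0, 1/steps, ..., (steps-1)/steps."""
    return [i / steps for i in range(steps)]


def frame_command(scad_file, index, steps, size=(1024, 768)):
    """Build (but do not run) a command line that renders a single frame."""
    t = index / steps
    return [
        "openscad",
        "-o", f"frame{index:05d}.png",      # hypothetical output name
        "-D", f"$t={t}",                    # override the animation variable
        f"--imgsize={size[0]},{size[1]}",   # image size in pixels
        scad_file,                          # hypothetical model file
    ]


if __name__ == "__main__":
    # Print the four commands for a 4-step animation
    for i in range(4):
        print(" ".join(frame_command("model.scad", i, 4)))
```

Each list could be passed to `subprocess.run`, and the resulting PNGs would still need to be stitched into a gif afterwards, which is exactly the extra step that made this route slower.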

Step 1: Automatically open and resize application window to specific size and position

First I figured out how to write an AppleScript to resize windows so I can screen-capture them quickly. The following AppleScript uses assistive access to resize and reposition the window of any app - including 'unscriptable' apps - but you'll need to allow Script Editor to control your computer in System Preferences (Security & Privacy > Privacy > Accessibility). You can change the numbers to fit the size you require. In my case I wanted to screencap it at 1024 x 768, but for some reason my screenshot app Monosnap does not start the capture at 0,0, so I adjusted the position to fit (pixel by pixel). I also only wanted the app's content, so I added 2px to the height and width.

AppleScript to resize app window and set position:

    set resizeApp to "OpenSCAD"
    -- 1024 x 768 of content, plus 2px each way
    set appHeight to 770
    set appWidth to 1026

    -- Screen bounds (not used below)
    tell application "Finder"
        set screenResolution to bounds of window of desktop
    end tell

    -- Launch the app, or bring it to the front
    tell application resizeApp
        activate
        reopen
    end tell

    -- Resize and reposition via assistive access (System Events)
    tell application "System Events"
        tell process resizeApp
            set the size of front window to {appWidth, appHeight}
            set the position of front window to {5, 0}
        end tell
    end tell

Step 2: Screen video

I just used Monosnap (Free, Mac/Win) for this.

Step 3: Convert mp4 to animated gif

To convert the mp4 files into animated gifs, I used Gif Brewery 3 (Free, Mac). What is it about the palindrome loop (boomerang) that works so well?
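For the curious, the palindrome ordering itself is simple to sketch. This is only an illustration of the idea in Python, not how Gif Brewery does it internally, and `palindrome` is a made-up helper name: play the frames forward, then backward, skipping the two end frames on the way back so neither is shown twice when the gif loops.

```python
def palindrome(frames):
    """Return frames forward then backward, without repeating the end frames."""
    frames = list(frames)
    # frames[-2:0:-1] walks back from the second-to-last frame to the second frame
    return frames + frames[-2:0:-1]


if __name__ == "__main__":
    print(palindrome([1, 2, 3, 4]))
```

Because the last frame of the sequence sits right next to the first frame of the next loop, there is never a visible jump cut, which may be part of why the boomerang feels so smooth.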

Anyway, I'm glad to have worked out a faster workflow for creating gifs, and maybe next time every other image I upload to my blog or website ought to be an animated gif!!!