This week, as part of my work, I went to a 2-day crash course in TensorFlow for NLP, which is admittedly ridiculous because (a) 2 days? What can one accomplish in 2 days? Would we not be better off slowly studying ML via a MOOC on our phones, or the Google Machine Learning Crash Course, or the official TensorFlow tutorials? (b) I am struggling with both the practical side (I have absolutely no maths foundation) and the theoretical side (I don't even understand regression models, but, I mean, do I need to understand regression models anyway?)

Which then begs the question: DO I REALLY NEED TO PEEK INSIDE THE BLACK BOX IN MY LINE OF WORK?

Or, WHAT IS MY LINE OF WORK ANYWAY? And how much technical understanding do I really need to have?

Now I obviously don't feel like I'm in any position to design the innards of the black box myself, but I'd like to be the person who gathers up all the inputs, preprocesses them, and stuffs them through the black box, so as to obtain an interesting and meaningful output (basically I'm more interested in the problem framing). But existential crises aside, this post is to gather up all my thoughts, outputs (ironically unrelated to the course I was at, but this is a personal blog anyway), and relevant links for the time being (pfftshaw, at the rate things are going they'll probably be outdated by 2020...)

Jupyter Notebook

Jupyter Notebook is the wiki I always wished I had! Usually when working in Python you're in the shell or an editor, and I make my wiki notes in a linear fashion to recount the story of what I was doing (in case I want to revisit my work at a later point). For the purposes of learning I find it most useful to think of my work as a linear narrative.

Jupyter is the new shell where you can do precisely that - write a linear narrative of what you think you were doing - alongside the cells of code that you run. It's generally quite easy to set up Jupyter Notebook via Anaconda, which installs both Python and Jupyter Notebook; when you start the notebook server, you can paste the link it prints in the terminal into your browser.

I could have embedded my notebooks instead of screenshotting them but I ain't gonna share my notebooks cos these are just silly "HELLO WORLD" type tings...

Let's say you don't want to run it in a local environment. That's fine too because you can use the cloud version - Google Colab. You can work in the cloud, upload files, and load files in from Google Drive. You can work on it at home on one computer and then go into the office and work on it on another computer with a different OS. You can write in Markdown and format equations using LaTeX.

As an interactive notebook there are so many opportunities for storytelling and documentation with Jupyter Notebook. And if you like things to be pretty, you can style either the notebook itself or the outputs with CSS.

Sketch RNN

I followed the Sketch RNN tutorial on Google Colab to produce the following Bus turning into a Cat...

Love the Quick Draw project because it is so much like the story I often tell about how I used to quiz people about what they thought a scallop looked like because I realised many Singaporeans think that it is a cake instead of a shellfish with a "scalloped edge shell".

I love the shonky-ness of the drawings and I kinda wanna make my own data set to add to it, and perhaps the shonky-ness is something I can amplify with my extremely shonky usb drawing robot which could use the vector data to make some ultra shonky drawings in the flesh.

Now that I have accidentally written the word shonky so many times I feel I should define what I mean: "shonky" means that the output is of dubious quality, and for me the term also has a certain comedic impact, like an Eraserhead baby moment which ends in nervous laughter. (Another word I like to use interchangeably with "shonky" is the Malay word "koyak" which I also imagine to have comedic impact)

Eg: When Tree Trunks explodes unexpectedly...

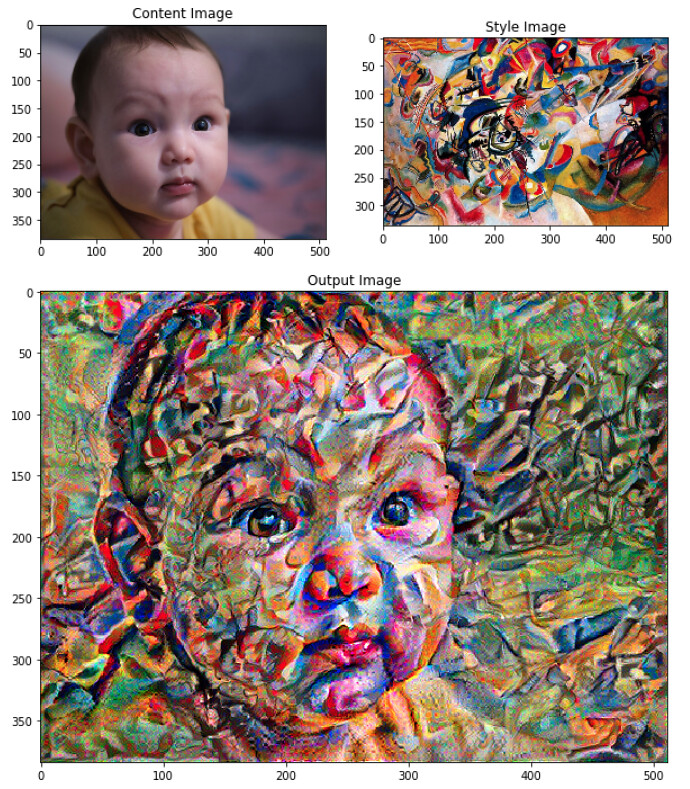

Neural Style Transfer

I followed the Neural Style Transfer with TensorFlow and Keras tutorial on Google Colab to produce the following:

Beano x Hokusai

Beano x Van Gogh's Starry Night

Beano x Kandinsky

Beano x Ghost in the Shell

Beano x Haring

Beano x Tiger

Beano x Klee

How does this work? The paper describes how the style of an image can be represented by the feature correlations across multiple layers of a convolutional network. This gives a multi-scale representation of the input image that captures its texture information but not its global arrangement. The higher layers of the network, on the other hand, capture the high-level content in terms of objects and their arrangement in the input image, but do not constrain the exact pixel values of the reconstruction.

Image Source: "A Neural Algorithm of Artistic Style" by Leon A. Gatys, Alexander S. Ecker, Matthias Bethge
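
To convince myself of the "texture but not global arrangement" bit, here is a tiny sketch of the feature correlations for a single layer, written as a Gram matrix in plain Python. This is not the tutorial's code: the toy feature map, the function names `gram_matrix` and `shuffle_positions`, and the choice to leave the matrix unnormalised are all my own assumptions for illustration. The point is that scrambling where things are in the image leaves the correlations untouched.

```python
import random


def gram_matrix(features):
    """Correlate every channel with every other channel.

    `features` is a list of C channels, each a flat list of N activations
    (one per spatial position). Entry [i][j] of the result is the inner
    product of channel i and channel j summed over all positions.
    """
    return [[sum(a * b for a, b in zip(fi, fj)) for fj in features]
            for fi in features]


def shuffle_positions(features, seed=0):
    """Apply the same random reordering of spatial positions to every channel."""
    order = list(range(len(features[0])))
    random.Random(seed).shuffle(order)
    return [[channel[k] for k in order] for channel in features]


# Hypothetical toy feature map: 3 channels x 4 spatial positions.
features = [
    [1.0, 0.0, 2.0, 1.0],
    [0.0, 1.0, 1.0, 3.0],
    [2.0, 2.0, 0.0, 1.0],
]

gram = gram_matrix(features)
shuffled_gram = gram_matrix(shuffle_positions(features))

# The sum runs over all positions, so their order cannot matter.
print(gram == shuffled_gram)
```

Because each entry sums over every position, the Gram matrix only knows which features tend to fire together, not where they fire, which is roughly why it behaves like a description of texture. As far as I understand it, the real thing computes this on the activations of several layers of a pretrained network and compares the matrices of the style image and the generated image.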
